Copying objects and buckets from Amazon S3 to Windows Azure Storage

This article describes how to copy an object and a bucket from Amazon S3 to Windows Azure blob storage.

One of the major improvements after June 7, 2012 was the improvement of the function of Copy Blob. In writing this article I used materials, which can be found here: http://blogs.msdn.com/b/windowsazurestorage/archive/2012/06/12/introducing-asynchronous-cross-account-copy-blob.aspx. What caught my attention functionality copy blob allows you to copy blobs from the outside Windows Azure, if they are publicly available. That is, they do not need to be in Windows Azure.

the

It made me sit down and think.. That is now I have the option of simply transferring my files to storage blobs Windows Azure, with the majority of the work will be performed by the platform itself. So I thought, not necessary to write a simple app that tries to copy a file (object) from Amazon S3 to store blobs in Windows Azure. Getting it done in a few hours (hours because there was no account in S3 to a point, respectively, knowledge of the client library to store were somewhat limited). But what I want to say: it really works and is really simple to make.

I thought about a few scenarios in which you might need to copy content from one cloud provider to store blobs in Windows Azure.

With all the new features and lower prices Windows Azure storage – a real alternative to other cloud providers that cloud storage. This may be one of the reasons you go with the used provider to Windows Azure Storage. Either you have always wanted to go, but couldn't figure out how to transfer data from the store. A new function of Copy Blob makes the solution to this problem superposter.

Now you can easily use store blobs as the place to backup from your cloud storage. This was possible before, but it was quite a painful process, since it required a lot of code.

With the latest innovations it has become really simple. Now you do not need to write code for copying byte from a source to a BLOB in Windows Azure. All this makes the platform. You just say where the source and where it must be transferred, cause the copy function and all.

the

OK, enough talk! Let's look at the code. I created a simple console application (code below), but before I get to it, I would like to discuss a couple of moments. .

Before you run the code you need to make several actions:

1. the Create account store: please note that the new functionality will work only if the storage account is created after 7th June 2012 (why – see note, approx. translator).

2. the Download the latest version of storage client library: at the time of writing, officially satelitarnej version of the library is 1.7. Unfortunately, the new functionality it is not working, so you need to download 1.7.1 from GitHub. Download the source and compile.

3. the Check out the public accessibility of the object/BLOB: a function to Copy Blobs can copy only publicly accessible Windows Azure blobs from outside. Therefore, in the case of Amazon S3, you need to make sure that the object has a minimum level of READ for anonymous users.

As I said above, the code is very simple:

the

The code is quite simple. What I do: I specify the credentials to access Windows Azure storage BLOB and the URL of the source (Amazon S3); create a container of blobs in Windows Azure and start copying a BLOB from a URL source. After sending the request to copy everything that makes the app, it monitors the copy process. As you can see, a single line of code for copying byte from a source to a receiver. All this makes Windows Azure.

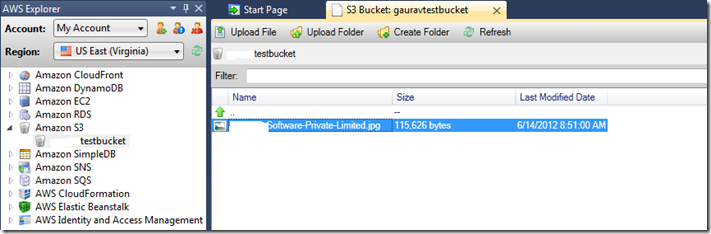

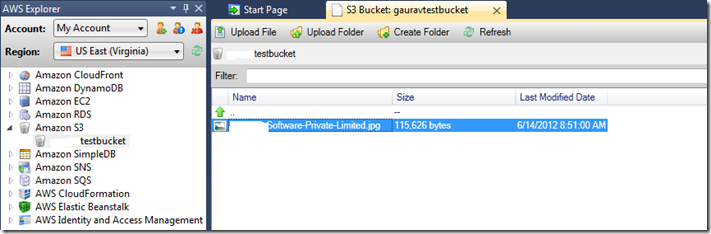

Here is the object in Amazon S3:

After the object is copied, I can see it in storage blobs Windows Azure using Cloud Storage Studio.

As you can see, Windows Azure provides the ability to easily transfer data. If you are considering migrating from another cloud platform on Windows Azure, but was worried about all sorts of related problems, one problem became less.

I created a simple example which copies a single file from Amazon S3 to store blobs in Windows Azure. This functionality can be extended to copy all objects from Amazon S3 in a container of blobs Windows Azure. Let's move on to the next article – how to copy a basket of Amazon S3 to store blobs in Windows Azure using Copy Blob.

Before you run the code you need to make several actions:

The code is very simple. Note that it is only for demonstration. Can modify it as you need.

the

The code is quite simple. Application:

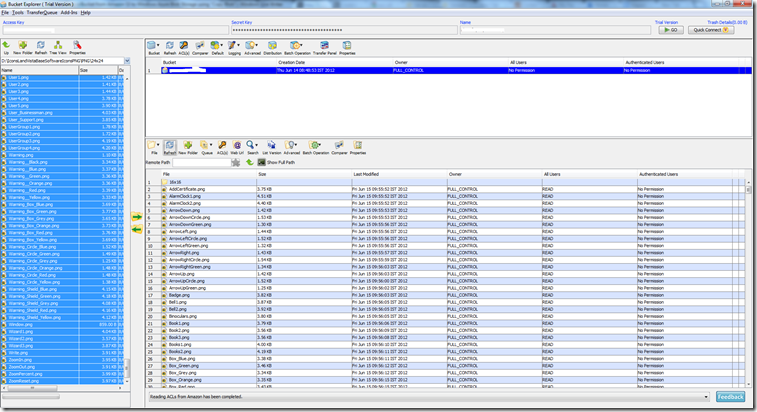

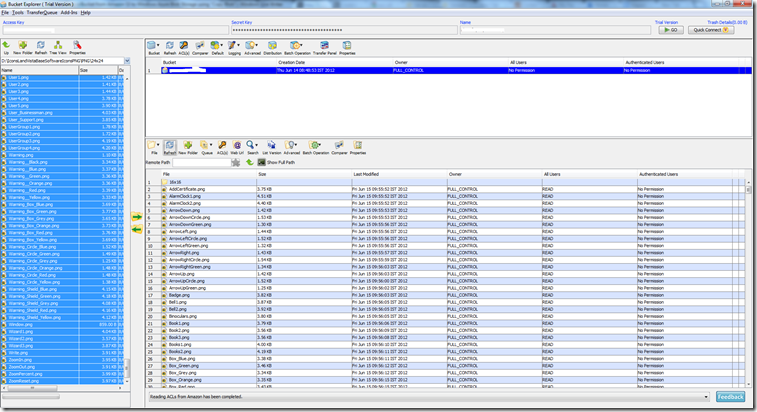

Content management in Amazon S3 I used Bucket Explorer, which showed the following status:

After copying the next state of the storage blobs Windows Azure showed Cloud Storage Studio.

Summary

I created a simple example that copies all the contents from Amazon S3 to store blobs in Windows Azure.

Summary of translator

Emotions Gaurava about the new features of the API are clear — to be honest, until 7 June 2012 the API to use the storage services sometimes limping on the functionality, as evidenced by the copy series of the same Gaurava on the comparison of storages of Windows Azure and Amazon. Now the ability to use Windows Azure storage as the same place for redundancy, but still with the ability to adjust the degree of redundancy (now there are two types), to avoid, probably, the effects of any disaster and to create a truly accusatorily (:) service.

a note from the translator

Services Windows Azure storage can accept requests that specify a specific version of each operation (service), the developer may specify a version using the header x-ms-version. Naturally, the functionality of the different versions and arrangements of the work (not conceptual) may vary.

It is necessary to consider the rules of the system in which the service of the blobs determines which version to use to interpret the SAS parameters:

1. If the request has the header x-ms-version, but no signedversion used version 2009-07-17 for interpretation of parameters of SAS, using the version specified in the x-ms-version.

2. If there is no request header x-ms-version and no signedversion, but the owner of the account set the version using Set Blob Service Properties operation will be used 2009-07-07 version for interpretation of parameters of SAS, using the version specified by the account holder for the service.

3. If there is no request header x-ms-version, no signedversion and the account owner did not install version of the service will use the version 2009-07-17 for interpretation of parameters of SAS. If the container has public rights and access restrictions have been established using the Set Container ACL operation using version 2009-09-19 (or newer).be used version 2009-09-19 of operations.

read More about versions and their supported functionality.

Translation of relevant posts Gaurav Mantri:

Objekty

Corzine

Article based on information from habrahabr.ru

One of the major improvements in the June 7, 2012 release was the enhanced Copy Blob function. In writing this article I used material that can be found here: http://blogs.msdn.com/b/windowsazurestorage/archive/2012/06/12/introducing-asynchronous-cross-account-copy-blob.aspx. What caught my attention is that Copy Blob now lets you copy blobs from outside Windows Azure, provided they are publicly accessible. That is, the source does not need to be in Windows Azure at all.

This is very cool!!!

It made me sit down and think. I now have the option of simply transferring my files to Windows Azure blob storage, with most of the work done by the platform itself. So I thought, why not write a simple app that copies a file (object) from Amazon S3 to Windows Azure blob storage? I got it done in a few hours (hours because until then I had no S3 account, so my knowledge of its client library was somewhat limited). But the point is this: it really works, and it is really simple to do.

Why do it?

I thought about a few scenarios in which you might need to copy content from another cloud provider to Windows Azure blob storage.

Windows Azure blob storage is really competitive

With all its new features and lower prices, Windows Azure storage is a real alternative to other cloud storage providers. That may be one reason to move from your current provider to Windows Azure Storage. Or perhaps you always wanted to move but could not figure out how to transfer your data. The new Copy Blob function makes solving this problem very simple.

Windows Azure blob storage as a backup for existing data

You can now easily use blob storage as a backup location for data held in another cloud store. This was possible before, but it was quite a painful process because it required a lot of code.

With the latest changes it has become really simple. You no longer need to write code that copies bytes from the source to a blob in Windows Azure; the platform does all of that. You just specify the source and the destination, call the copy function, and that's it.

Show me the code!

OK, enough talk! Let's look at the code. I created a simple console application (code below), but before I get to it, I would like to cover a couple of points.

Prerequisites

Before you run the code, you need to do a few things:

1. Create a storage account: note that the new functionality works only if the storage account was created after June 7, 2012 (for the reason, see the translator's note).

2. Download the latest version of the storage client library: at the time of writing, the officially released version of the library is 1.7. Unfortunately, the new functionality does not work with it, so you need version 1.7.1 from GitHub. Download the source and compile it.

3. Make sure the object/blob is publicly accessible: Copy Blob can copy from outside Windows Azure only blobs that are publicly accessible. So, in the case of Amazon S3, make sure the object grants at least READ permission to anonymous users.
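The third prerequisite can be checked before starting a copy. The following is a minimal sketch, assuming an anonymous HTTP HEAD request is an acceptable probe. `PublicAccessCheck.IsAnonymouslyReadable` is a hypothetical helper, not part of either SDK; it is built on `System.Net.HttpWebRequest` and treats any response other than 200 OK, and any network error, as "not readable".

```csharp
using System;
using System.Net;

// Hypothetical helper, not part of the Amazon or Windows Azure SDKs.
public static class PublicAccessCheck
{
    // Returns true when an unauthenticated HEAD request to the URL succeeds.
    public static bool IsAnonymouslyReadable(Uri objectUrl)
    {
        var request = (HttpWebRequest)WebRequest.Create(objectUrl);
        request.Method = "HEAD";   // ask for headers only, no object body
        request.Timeout = 10000;   // milliseconds; an arbitrary choice for this sketch
        try
        {
            using (var response = (HttpWebResponse)request.GetResponse())
            {
                return response.StatusCode == HttpStatusCode.OK;
            }
        }
        catch (WebException)
        {
            // 403 Forbidden, 404 Not Found, timeouts and connection failures land here.
            return false;
        }
    }
}
```

A call such as `PublicAccessCheck.IsAnonymouslyReadable(new Uri(amazonObjectUrl))` before the copy request would surface a missing READ grant early instead of as a failed copy.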

Code

As I said above, the code is very simple:


    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Text;
    using Microsoft.WindowsAzure;
    using Microsoft.WindowsAzure.StorageClient;

    namespace CopyAmazonObjectToBlobStorage
    {
        class Program
        {
            // Fill in these values before running.
            private static string azureStorageAccountName = "";
            private static string azureStorageAccountKey = "";
            private static string azureBlobContainerName = "";
            private static string amazonObjectUrl = "";
            private static string azureBlobName = "";

            static void Main(string[] args)
            {
                CloudStorageAccount csa = new CloudStorageAccount(new StorageCredentialsAccountAndKey(azureStorageAccountName, azureStorageAccountKey), true);
                CloudBlobClient blobClient = csa.CreateCloudBlobClient();
                var blobContainer = blobClient.GetContainerReference(azureBlobContainerName);
                Console.WriteLine("Trying to create the blob container....");
                blobContainer.CreateIfNotExist();
                Console.WriteLine("Blob container created....");
                var blockBlob = blobContainer.GetBlockBlobReference(azureBlobName);
                Console.WriteLine("Created a reference for block blob in Windows Azure....");
                Console.WriteLine("Blob Uri:" + blockBlob.Uri.AbsoluteUri);
                Console.WriteLine("Now trying to initiate copy....");
                // The platform performs the copy; the application only sends the request.
                blockBlob.StartCopyFromBlob(new Uri(amazonObjectUrl), null, null, null);
                Console.WriteLine("Copy started....");
                Console.WriteLine("Now tracking BLOB's copy progress....");
                bool continueLoop = true;
                while (continueLoop)
                {
                    Console.WriteLine("");
                    Console.WriteLine("Fetching lists of blobs in Azure blob container....");
                    // Ask the listing to include copy state for each blob.
                    var blobsList = blobContainer.ListBlobs(true, BlobListingDetails.Copy);
                    foreach (var blob in blobsList)
                    {
                        var tempBlockBlob = (CloudBlob) blob;
                        if (tempBlockBlob.Name == azureBlobName)
                        {
                            var copyStatus = tempBlockBlob.CopyState;
                            if (copyStatus != null)
                            {
                                Console.WriteLine("Status of blob copy...." + copyStatus.Status);
                                var percentComplete = copyStatus.BytesCopied / copyStatus.TotalBytes;
                                Console.WriteLine("Total bytes to copy...." + copyStatus.TotalBytes);
                                Console.WriteLine("Total bytes copied...." + copyStatus.BytesCopied);
                                // Stop polling once the copy is no longer pending.
                                if (copyStatus.Status != CopyStatus.Pending)
                                {
                                    continueLoop = false;
                                }
                            }
                        }
                    }
                    Console.WriteLine("");
                    Console.WriteLine("==============================================");
                    System.Threading.Thread.Sleep(1000);
                }
                Console.WriteLine("Process completed....");
                Console.WriteLine("Press any key to terminate the program....");
                Console.ReadLine();
            }
        }
    }

The code is quite simple. Here is what it does: I specify the credentials for Windows Azure blob storage and the URL of the source (Amazon S3), create a blob container in Windows Azure, and start copying a blob from the source URL. Once the copy request is sent, all the application does is monitor the copy process. As you can see, there is not a single line of code that copies bytes from the source to the destination. Windows Azure does all of that.
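The listing computes `copyStatus.BytesCopied / copyStatus.TotalBytes` but never prints it, and if both counters are integer types (as their names suggest) that division truncates to 0 until the copy finishes. A minimal sketch of a percentage calculation follows. `CopyProgress.Percent` is a hypothetical helper, not part of the storage client library, and it assumes the counters can be passed as nullable 64-bit integers.

```csharp
using System;

// Hypothetical helper, not part of the storage client library.
public static class CopyProgress
{
    // Returns the completed share of a copy as a percentage (0-100),
    // or null when either counter is unknown or the total is not positive.
    public static double? Percent(long? bytesCopied, long? totalBytes)
    {
        if (!bytesCopied.HasValue || !totalBytes.HasValue || totalBytes.Value <= 0)
        {
            return null;
        }
        // Multiply by a double first so the division is not truncated.
        return 100.0 * bytesCopied.Value / totalBytes.Value;
    }
}
```

For example, `CopyProgress.Percent(512, 2048)` evaluates to 25, while a missing counter yields `null` instead of throwing.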

Here is the object in Amazon S3:

After the object is copied, I can see it in storage blobs Windows Azure using Cloud Storage Studio.

As you can see, Windows Azure makes it easy to transfer data. If you were considering migrating from another cloud platform to Windows Azure but were worried about the problems involved, that is one problem fewer.

I created a simple example that copies a single file from Amazon S3 to Windows Azure blob storage. This functionality can be extended to copy all objects from an Amazon S3 bucket into a Windows Azure blob container. Let's move on to the next article: how to copy an Amazon S3 bucket to Windows Azure blob storage using Copy Blob.

Prerequisites

Before you run the code, you need to do a few things:

- Make sure the objects are publicly accessible: Copy Blob can copy from outside Windows Azure only blobs that are publicly accessible. So, in the case of Amazon S3, make sure each object grants at least READ permission to anonymous users.

- Create a storage account: note that the new functionality works only if the storage account was created after June 7, 2012 (for the reason, see the translator's note).

- Download the latest version of the storage client library: at the time of writing, the officially released version of the library is 1.7. Unfortunately, the new functionality does not work with it, so you need version 1.7.1 from GitHub. Download the source and compile it.

- Have your Amazon credentials at hand: you will need the Amazon AccessKey and SecretKey to get the list of objects stored in Amazon S3.

Code

The code is very simple. Note that it is for demonstration only. You can modify it as you need.

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Text;
    using Amazon.S3;
    using Amazon.S3.Model;
    using Amazon.S3.Transfer;
    using Amazon.S3.Util;
    using Microsoft.WindowsAzure;
    using Microsoft.WindowsAzure.StorageClient;
    using System.Globalization;

    namespace CopyAmazonBucketToBlobStorage
    {
        class Program
        {
            // Fill in these values before running.
            private static string azureStorageAccountName = "";
            private static string azureStorageAccountKey = "";
            private static string azureBlobContainerName = "";
            private static string amazonAccessKey = "";
            private static string amazonSecretKey = "";
            private static string amazonBucketName = "";
            private static string objectUrlFormat = "https://{0}.s3.amazonaws.com/{1}";
            private static Dictionary<string, CopyBlobProgress> CopyBlobProgress;

            static void Main(string[] args)
            {
                // Reference the Windows Azure storage account.
                CloudStorageAccount azureCloudStorageAccount = new CloudStorageAccount(new StorageCredentialsAccountAndKey(azureStorageAccountName, azureStorageAccountKey), true);
                // Get a reference to the blob container where the objects will be copied.
                var blobContainer = azureCloudStorageAccount.CreateCloudBlobClient().GetContainerReference(azureBlobContainerName);
                // Create the blob container, if necessary.
                Console.WriteLine("Trying to create the blob container....");
                blobContainer.CreateIfNotExist();
                Console.WriteLine("Blob container created....");
                // Create the Amazon S3 client.
                AmazonS3Client amazonClient = new AmazonS3Client(amazonAccessKey, amazonSecretKey);
                // Initialize the dictionary that tracks copy progress per blob.
                CopyBlobProgress = new Dictionary<string, CopyBlobProgress>();
                string continuationToken = null;
                bool continueListObjects = true;
                // ListObjects returns at most 1000 objects per call,
                // so keep calling it until all objects have been returned.
                while (continueListObjects)
                {
                    ListObjectsRequest requestToListObjects = new ListObjectsRequest()
                    {
                        BucketName = amazonBucketName,
                        Marker = continuationToken,
                        MaxKeys = 100,
                    };
                    Console.WriteLine("Trying to list objects from S3 Bucket....");
                    // Get the list of objects in Amazon S3.
                    var listObjectsResponse = amazonClient.ListObjects(requestToListObjects);
                    // The objects returned by this call.
                    var objectsFetchedSoFar = listObjectsResponse.S3Objects;
                    Console.WriteLine("Object listing complete. Now starting the copy process....");
                    // Check whether more objects are available. Process this batch first,
                    // then fetch the next one using the continuation token.
                    continuationToken = listObjectsResponse.NextMarker;
                    foreach (var s3Object in objectsFetchedSoFar)
                    {
                        string objectKey = s3Object.Key;
                        // ListObjects returns both files and folders. Skip folders by checking
                        // the object key: if it ends with "/", assume it is a folder.
                        if (!objectKey.EndsWith("/"))
                        {
                            // Build the URL to copy from.
                            string urlToCopy = string.Format(CultureInfo.InvariantCulture, objectUrlFormat, amazonBucketName, objectKey);
                            // Create an instance of CloudBlockBlob.
                            CloudBlockBlob blockBlob = blobContainer.GetBlockBlobReference(objectKey);
                            var blockBlobUrl = blockBlob.Uri.AbsoluteUri;
                            if (!CopyBlobProgress.ContainsKey(blockBlobUrl))
                            {
                                CopyBlobProgress.Add(blockBlobUrl, new CopyBlobProgress()
                                {
                                    Status = CopyStatus.NotStarted,
                                });
                                // Start the copy. Wrap it in a try/catch block because
                                // only publicly accessible Amazon S3 objects can be copied.
                                try
                                {
                                    Console.WriteLine(string.Format("Trying to copy \"{0}\" to \"{1}\"", urlToCopy, blockBlobUrl));
                                    blockBlob.StartCopyFromBlob(new Uri(urlToCopy));
                                    CopyBlobProgress[blockBlobUrl].Status = CopyStatus.Started;
                                }
                                catch (Exception exception)
                                {
                                    CopyBlobProgress[blockBlobUrl].Status = CopyStatus.Failed;
                                    CopyBlobProgress[blockBlobUrl].Error = exception;
                                }
                            }
                        }
                    }
                    Console.WriteLine("");
                    Console.WriteLine("==========================================================");
                    Console.WriteLine("");
                    Console.WriteLine("Checking the status of copy process....");
                    // Monitor the copy process.
                    bool checkForBlobCopyStatus = true;
                    while (checkForBlobCopyStatus)
                    {
                        // List the blobs in the container, including their copy state.
                        var blobsList = blobContainer.ListBlobs(true, BlobListingDetails.Copy);
                        foreach (var blob in blobsList)
                        {
                            var tempBlockBlob = blob as CloudBlob;
                            var copyStatus = tempBlockBlob.CopyState;
                            if (CopyBlobProgress.ContainsKey(tempBlockBlob.Uri.AbsoluteUri))
                            {
                                var copyBlobProgress = CopyBlobProgress[tempBlockBlob.Uri.AbsoluteUri];
                                if (copyStatus != null)
                                {
                                    Console.WriteLine(string.Format("Status of \"{0}\" blob copy....{1}", tempBlockBlob.Uri.AbsoluteUri, copyStatus.Status));
                                    // Map the library's copy status onto the local CopyStatus enum.
                                    switch (copyStatus.Status)
                                    {
                                        case Microsoft.WindowsAzure.StorageClient.CopyStatus.Aborted:
                                            if (copyBlobProgress != null)
                                            {
                                                copyBlobProgress.Status = CopyStatus.Aborted;
                                            }
                                            break;
                                        case Microsoft.WindowsAzure.StorageClient.CopyStatus.Failed:
                                            if (copyBlobProgress != null)
                                            {
                                                copyBlobProgress.Status = CopyStatus.Failed;
                                            }
                                            break;
                                        case Microsoft.WindowsAzure.StorageClient.CopyStatus.Invalid:
                                            if (copyBlobProgress != null)
                                            {
                                                copyBlobProgress.Status = CopyStatus.Invalid;
                                            }
                                            break;
                                        case Microsoft.WindowsAzure.StorageClient.CopyStatus.Pending:
                                            if (copyBlobProgress != null)
                                            {
                                                copyBlobProgress.Status = CopyStatus.Pending;
                                            }
                                            break;
                                        case Microsoft.WindowsAzure.StorageClient.CopyStatus.Success:
                                            if (copyBlobProgress != null)
                                            {
                                                copyBlobProgress.Status = CopyStatus.Success;
                                            }
                                            break;
                                    }
                                }
                            }
                        }
                        // Keep polling while at least one tracked blob is still pending.
                        var pendingBlob = CopyBlobProgress.FirstOrDefault(c => c.Value.Status == CopyStatus.Pending);
                        if (string.IsNullOrWhiteSpace(pendingBlob.Key))
                        {
                            checkForBlobCopyStatus = false;
                        }
                        else
                        {
                            System.Threading.Thread.Sleep(1000);
                        }
                    }
                    // No continuation token means the whole bucket has been listed.
                    if (string.IsNullOrWhiteSpace(continuationToken))
                    {
                        continueListObjects = false;
                    }
                    Console.WriteLine("");
                    Console.WriteLine("==========================================================");
                    Console.WriteLine("");
                }
                Console.WriteLine("Process completed....");
                Console.WriteLine("Press any key to terminate the program....");
                Console.ReadLine();
            }
        }

        public class CopyBlobProgress
        {
            public CopyStatus Status
            {
                get;
                set;
            }

            public Exception Error
            {
                get;
                set;
            }
        }

        public enum CopyStatus
        {
            NotStarted,
            Started,
            Aborted,
            Failed,
            Invalid,
            Pending,
            Success
        }
    }
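The listing builds each source URL by placing the raw object key into `objectUrlFormat`. Keys that contain spaces, `#`, `?` or non-ASCII characters may not survive that unescaped. Below is a minimal sketch that assumes the same URL pattern as the listing. `S3Url.ForObject` is a hypothetical helper, not part of the Amazon SDK; it escapes each path segment with `Uri.EscapeDataString` and keeps the `/` separators.

```csharp
using System;
using System.Globalization;
using System.Linq;

// Hypothetical helper, not part of the Amazon SDK.
public static class S3Url
{
    // Same pattern as objectUrlFormat in the listing.
    private const string ObjectUrlFormat = "https://{0}.s3.amazonaws.com/{1}";

    public static string ForObject(string bucketName, string objectKey)
    {
        // Escape each segment separately so "/" keeps its meaning as a separator.
        string[] escapedSegments = objectKey
            .Split('/')
            .Select(segment => Uri.EscapeDataString(segment))
            .ToArray();
        string escapedKey = string.Join("/", escapedSegments);
        return string.Format(CultureInfo.InvariantCulture, ObjectUrlFormat, bucketName, escapedKey);
    }
}
```

With the made-up bucket name `my-bucket`, `S3Url.ForObject("my-bucket", "photos/summer 2012.jpg")` returns `https://my-bucket.s3.amazonaws.com/photos/summer%202012.jpg`.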

The code is quite simple. The application:

1. Creates a blob container in Windows Azure storage, if needed.

2. Gets the list of objects from Amazon S3. Note that Amazon S3 returns at most 1000 objects per call; if there are more, it also returns a continuation token that is used to fetch the next subset of objects.

3. For each returned object, builds the URL that is passed to the Copy Blob function.

4. Checks the status of the copy process. Once all copies have finished, steps 2-4 are repeated until every object has been copied.
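The list-then-copy loop described above can be sketched independently of either SDK. In this sketch `Page`, `BucketWalker.ListPage` and the in-memory key list are hypothetical stand-ins for `ListObjectsRequest` and `ListObjects`. Only the control flow mirrors the listing: fetch a page, process it, continue while a marker is returned, and skip keys ending in `/`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for one page of a ListObjects response.
public sealed class Page
{
    public List<string> Keys = new List<string>();
    public string NextMarker; // null when there are no more pages
}

public static class BucketWalker
{
    // Stand-in for a listing call: returns up to maxKeys keys that sort after marker.
    public static Page ListPage(IList<string> allKeys, string marker, int maxKeys)
    {
        if (maxKeys < 1) throw new ArgumentOutOfRangeException("maxKeys");
        List<string> remaining = allKeys
            .OrderBy(k => k, StringComparer.Ordinal)
            .Where(k => marker == null || string.CompareOrdinal(k, marker) > 0)
            .ToList();
        var page = new Page { Keys = remaining.Take(maxKeys).ToList() };
        if (remaining.Count > maxKeys)
        {
            // More keys exist: the last key of this page is the marker for the next call.
            page.NextMarker = page.Keys[page.Keys.Count - 1];
        }
        return page;
    }

    // Walks every page and returns the keys that would be copied (folder keys skipped).
    public static List<string> KeysToCopy(IList<string> allKeys, int maxKeys)
    {
        var result = new List<string>();
        string marker = null;
        do
        {
            Page page = ListPage(allKeys, marker, maxKeys);
            result.AddRange(page.Keys.Where(k => !k.EndsWith("/", StringComparison.Ordinal)));
            marker = page.NextMarker;
        } while (marker != null);
        return result;
    }
}
```

In the real application, the body of the `do` loop is where each key would be turned into a URL and handed to `StartCopyFromBlob`, followed by the status polling.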

Content management in Amazon S3 I used Bucket Explorer, which showed the following status:

After copying the next state of the storage blobs Windows Azure showed Cloud Storage Studio.

Summary

I created a simple example that copies all the contents of an Amazon S3 bucket to Windows Azure blob storage.

Translator's summary

Gaurav's excitement about the new API features is understandable. To be honest, until June 7, 2012 the storage services API was sometimes lacking in functionality, as Gaurav's own series of articles comparing Windows Azure and Amazon storage shows. Now you can use Windows Azure storage as a backup location, with the ability to adjust the degree of redundancy (there are now two types), which probably lets you avoid the effects of almost any disaster and build a truly fault-tolerant (:) service.

Translator's note

Windows Azure storage services accept requests that specify a particular version for each operation (service); the developer specifies the version with the x-ms-version header. Naturally, functionality and implementation details (though not the concepts) may differ between versions.

Keep in mind the rules by which the blob service determines which version to use to interpret SAS parameters:

1. If the request has the x-ms-version header but no signedversion, version 2009-07-17 is used to interpret the SAS parameters, and the version specified in x-ms-version is used to execute the operation.

2. If the request has no x-ms-version header and no signedversion, but the account owner has set a version using the Set Blob Service Properties operation, version 2009-07-17 is used to interpret the SAS parameters, and the version set by the account owner for the service is used to execute the operation.

3. If the request has no x-ms-version header and no signedversion, and the account owner has not set a service version, version 2009-07-17 is used to interpret the SAS parameters. If the container is public and its access restrictions were set by a Set Container ACL operation using version 2009-09-19 (or newer), version 2009-09-19 is used to execute the operation.
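The three rules can be read as a small decision function. The sketch below only illustrates the list above for requests without signedversion. `SasVersionRules` and its parameter names are hypothetical, and the fallback operation version in rule 3 (2009-07-17 when the container condition does not apply) is an assumption, because the text states that version only for SAS interpretation.

```csharp
using System;

// Hypothetical illustration of the rules above; not an API of the storage service.
public static class SasVersionRules
{
    // Version named in the rules above for interpreting SAS parameters
    // when the request carries no signedversion.
    public const string SasInterpretationVersion = "2009-07-17";

    // Returns the version used to execute the operation for a request without signedversion.
    public static string OperationVersion(
        string xMsVersionHeader,
        string accountDefaultVersion,
        bool publicContainerAclSetWith20090919OrLater)
    {
        // Rule 1: an explicit x-ms-version header wins.
        if (!string.IsNullOrEmpty(xMsVersionHeader)) return xMsVersionHeader;
        // Rule 2: otherwise use the version the account owner set for the service.
        if (!string.IsNullOrEmpty(accountDefaultVersion)) return accountDefaultVersion;
        // Rule 3: public container with an ACL set using 2009-09-19 or newer.
        if (publicContainerAclSetWith20090919OrLater) return "2009-09-19";
        // Assumption: with nothing else specified, fall back to 2009-07-17.
        return SasInterpretationVersion;
    }
}
```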

Read more about versions and their supported functionality.

Translations of the corresponding posts by Gaurav Mantri:

Objects

Buckets
